How to measure communication effectiveness: A practical guide

Discover how to measure communication effectiveness with actionable metrics and proven frameworks to turn discussion into measurable results.

If you want to measure your communication effectiveness, you have to get beyond "feelings." That means shifting to a data-driven mindset: define clear goals, gather real performance data on yourself, and then use that information to get better.

Think of it this way: you’re treating communication like any other clinical skill that can be systematically practiced and improved.

Why You Cannot Afford to Guess on Communication Skills

In healthcare, communication isn't a "soft skill"—it’s a critical clinical tool that can have life-or-death consequences. The top applicants for medical, dental, or nursing programs get this. They don't just hope they communicate well; they treat it like a measurable competency that demands deliberate practice and objective feedback.

Your interview is much more than a conversation. It's a live demonstration of your professional readiness.

The stakes couldn't be higher. The ability to convey complex information with clarity, show genuine empathy, and navigate difficult conversations is directly tied to patient safety and outcomes. In fact, the research paints a pretty stark picture of what happens when communication breaks down.

A widely cited estimate attributes approximately 80% of serious medical errors to miscommunication between caregivers during patient handoffs. You can explore the full findings on patient safety and communication.

That single statistic changes everything about interview prep. You’re not just trying to impress an admissions committee. You're proving you have the foundational skills needed to prevent harm and build trust with both patients and colleagues.

Moving From Guesswork to a Systematic Framework

Simply feeling like an interview "went well" isn’t going to cut it anymore. To really stand out, you need to swap those subjective impressions for a data-driven approach. It’s about adopting a systematic framework that mirrors how medical professionals master clinical procedures.

This framework really just boils down to three core components:

- Defining Measurable Goals: Forget vague aims like "be more confident." Get specific. A much better goal is, "Reduce my filler word count to under five per minute" or "Consistently score a 4/5 on the empathy rubric during ethical dilemma prompts."

- Collecting Performance Data: This is where you gather concrete information about how you’re actually doing. You could record yourself for a self-review, ask a friend to use a structured peer-review form, or even use an AI tool that gives you instant analysis of your pacing and word choice.

- Analyzing and Adjusting: Once you have the data, you can pinpoint specific weaknesses and create targeted drills to fix them. If your pacing is too fast, you might practice with a metronome. If you're struggling to convey empathy, you can rehearse specific phrasing until it feels natural.

This cycle—define, collect, analyze, adjust—is what transforms your prep from a guessing game into a methodical skill-building exercise. It’s the same discipline required in the clinic, and demonstrating it now shows a level of maturity that admissions committees are looking for.

By learning how to measure your communication effectiveness today, you're building a habit that will serve you for your entire healthcare career.

Establishing Your Communication Goals and Metrics

To get better at something, you first have to define what “better” actually looks like. Vague goals like “be more confident” or “improve my interview skills” are impossible to track because they don’t give you anything concrete to aim for. The real key is to break those big ambitions down into specific, measurable objectives.

Think about it like a clinical endpoint. A doctor wouldn’t just aim to “improve a patient’s health.” Instead, they'd set a clear target: “reduce systolic blood pressure by 10 mmHg within six weeks.” We need that exact same level of precision for our communication goals.

So, a generic goal like “show more empathy” becomes a specific, actionable objective: "Demonstrate structured empathy in an ethical dilemma by acknowledging the conflict, validating multiple perspectives, and articulating a patient-centered resolution." Suddenly, you have a clear target to hit during your practice sessions.

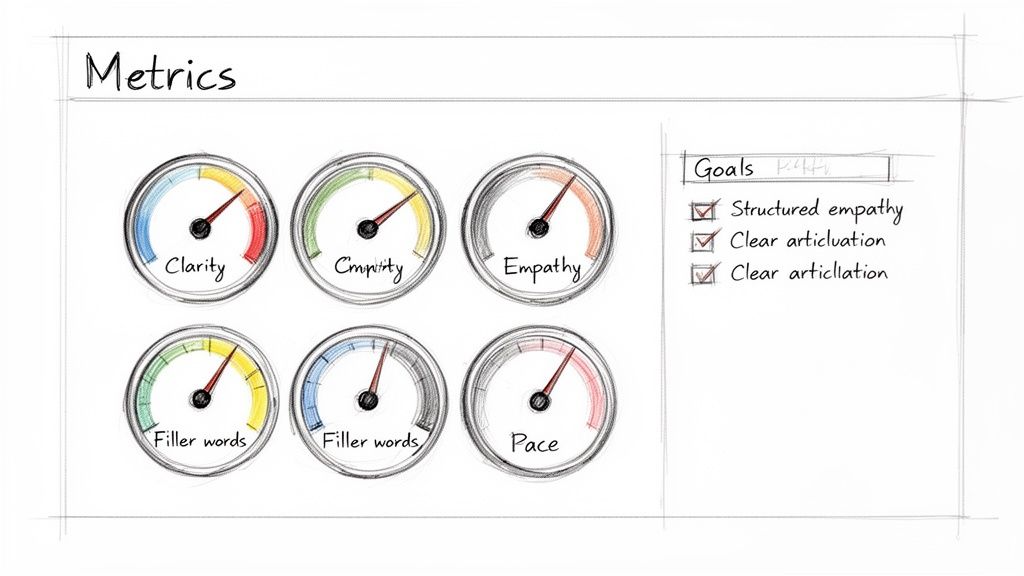

Defining Your Core Metrics

Once your objective is clear, you need metrics—the real, tangible data points that tell you whether you’re actually improving. These are the numbers and scores that take all the guesswork out of your self-assessment. To do this right, you need to look at both what you say and how you say it.

Your metrics should tie directly back to the skills your interviewers are looking for. Here are a few key performance indicators (KPIs) you can start tracking in your mock interviews:

- Clarity Score: How well did you structure your response? Was it logical and easy for someone else to follow? A simple 1-5 scale works perfectly here.

- Empathy Rating: Did you actually acknowledge the emotional or ethical complexities in the scenario? This can also be a 1-5 rating, scored against specific criteria such as whether you acknowledged the other person's feelings and validated their perspective.

- Filler Word Frequency: This one is easy. Count the number of "ums," "ahs," and "likes" you use per minute of speaking. The goal here is simple: get that number down over time.

- Speaking Pace: Measure your words per minute (WPM). A conversational pace between 140 and 160 WPM is often cited as the sweet spot for clear comprehension.

These data points give you objective feedback. Instead of just feeling like you rambled, you can see that your Clarity Score was a 2/5 and your filler word count was high. Now you have specific things to fix. AI-powered tools can automate much of this tracking, and you can see what’s possible by exploring different AI interview preparation features.
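Filler frequency and speaking pace are both simple rates: a count divided by your speaking time in minutes. The sketch below shows that arithmetic for a single answer. It is a minimal illustration, assuming you have a plain-text transcript and the answer's duration in seconds; the filler list and the function names are hypothetical, and a naive word match will overcount words like "like" that are sometimes used legitimately.

```python
import re

# Hypothetical filler list. "like" is also a normal word, so a raw count
# overestimates it; review flagged instances by hand if precision matters.
FILLERS = {"um", "uh", "ah", "like"}


def speaking_metrics(transcript: str, duration_seconds: float) -> dict:
    """Return words per minute and filler words per minute, rounded to 0.1."""
    words = re.findall(r"[a-z']+", transcript.lower())
    minutes = duration_seconds / 60
    filler_count = sum(1 for word in words if word in FILLERS)
    return {
        "wpm": round(len(words) / minutes, 1),
        "fillers_per_min": round(filler_count / minutes, 1),
    }


def pace_in_range(wpm: float, low: float = 140, high: float = 160) -> bool:
    """Check a pace against the 140-160 WPM guideline."""
    return low <= wpm <= high


metrics = speaking_metrics(
    "Um, I think the key issue here is, like, patient autonomy.", 4.5
)
print(metrics, pace_in_range(metrics["wpm"]))
```

Because the rate is normalized by time, very short samples are noisy: in a 4.5-second snippet, just two fillers already work out to roughly 27 per minute. Measure over complete answers for numbers you can compare across sessions.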

Creating a Practical Scoring Rubric

A scoring rubric is your best friend for making this whole process consistent. It’s a simple tool that connects your objectives and metrics, letting you grade your performance the same way every time. This isn’t about being overly critical; it’s about being objective.

The principles behind this are similar to those in other performance-driven fields. For instance, the logic behind measuring content performance applies here too: tracking clarity and engagement is universal, whether the communication is written or spoken.

Here’s a look at some of the core metrics you can build into a rubric for healthcare interviews.

Key Communication Metrics for Healthcare Interviews

This table breaks down essential communication skills into specific, trackable metrics, providing a clear framework for self-assessment and improvement.

| Communication Dimension | Primary Metric | What It Measures | Why It Matters |
|---|---|---|---|
| Clarity | Logical Structure Score (1-5) | The ability to present ideas in a clear, organized, and easy-to-follow sequence. | Interviewers need to follow your thought process. A high score means your reasoning is transparent and persuasive. |
| Pacing | Words Per Minute (WPM) | The speed of your speech. Aim for the 140-160 WPM range. | Speaking too fast can signal nervousness and make you hard to understand. Too slow, and you might lose your listener's attention. |
| Conciseness | Filler Word Count | The frequency of non-essential words like "um," "ah," "like," and "so." | High filler word counts can undermine your credibility and make your responses sound unplanned or unconfident. |
| Empathy | Empathy Validation Score (1-5) | Your ability to acknowledge and validate the emotional or ethical perspectives presented in a scenario. | Medicine is human-centered. This score shows you can connect with patients and colleagues on an emotional level, not just an intellectual one. |

Using a framework like this transforms a vague feeling about your performance into hard data. It’s the foundation for making real, targeted progress with every single practice session.
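Once scores from several sessions accumulate, averaging each rubric dimension shows whether you are closing the gap to your goals. A minimal sketch, assuming each session is stored as a dictionary of 1-5 rubric scores; the target values echo the example goal of a consistent 4/5 and are illustrative, not official cutoffs, and `summarize` is a hypothetical helper.

```python
from statistics import mean

# Illustrative targets on the 1-5 rubric scales; adjust to your own goals.
TARGETS = {"clarity": 4, "empathy": 4}


def summarize(sessions: list[dict]) -> dict:
    """Average each rubric dimension, compare it to its target, and show the trend."""
    summary = {}
    for dim, target in TARGETS.items():
        scores = [session[dim] for session in sessions]
        average = mean(scores)
        summary[dim] = {
            "average": average,
            "meets_target": average >= target,
            "change": scores[-1] - scores[0],  # latest session minus first
        }
    return summary


history = [
    {"clarity": 3, "empathy": 2},
    {"clarity": 4, "empathy": 3},
    {"clarity": 5, "empathy": 4},
]
for dim, stats in summarize(history).items():
    print(dim, stats)
```

An average can hide a recent improvement, which is why the sketch also reports the change from the first to the latest session.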

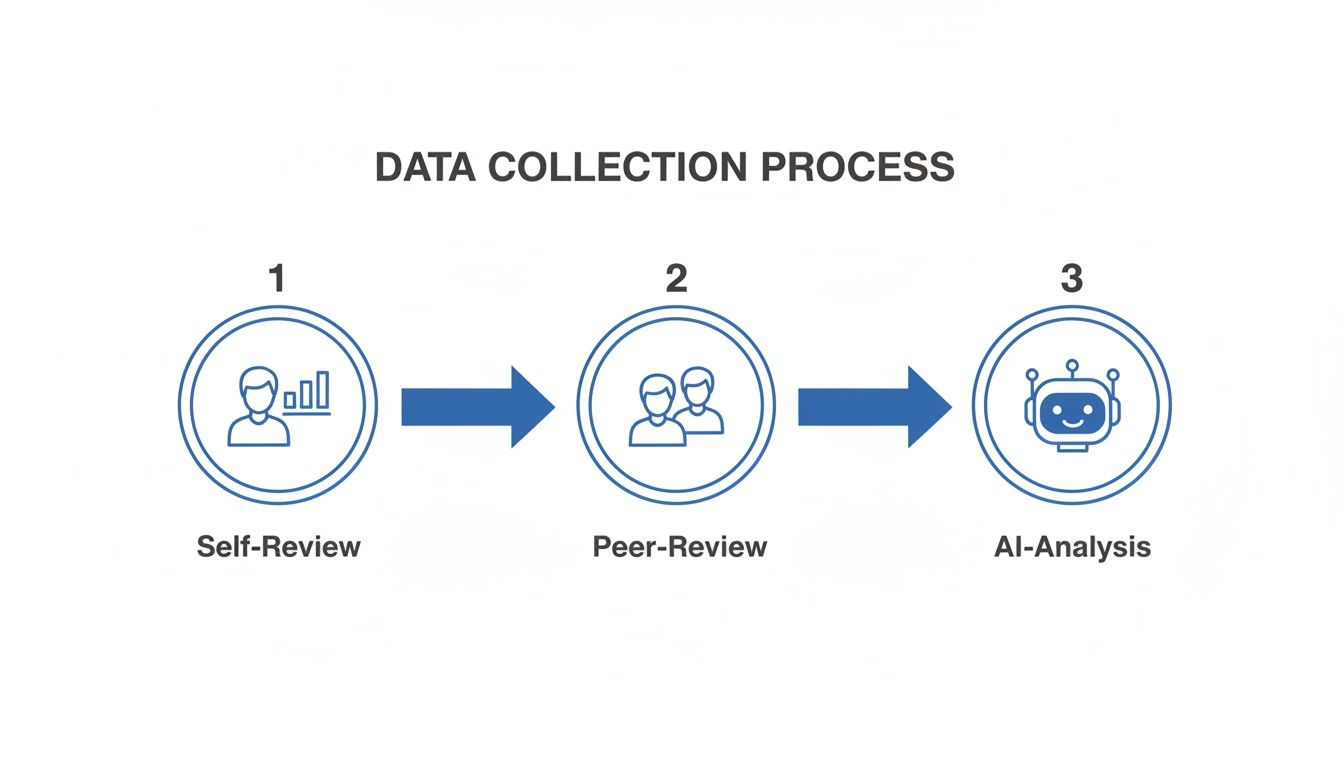

How to Collect Actionable Performance Data

Once you have your goals and metrics locked in, the next step is actually gathering the raw data. Here’s the thing: effective measurement isn’t about relying on a single source of feedback. It’s about building a complete picture of your performance from multiple angles, catching subtle issues that any one method alone might miss.

This multi-channel approach is standard practice in high-stakes fields. For instance, medical communications aimed at healthcare professionals blend different data streams to figure out their real impact. They mix first-party data, self-reported feedback, and platform analytics to get the full story. You can read more about measuring medical communications strategy on medcomms-experts.com.

Let’s walk through three powerful ways to gather this kind of rich, well-rounded data for your own interview practice.

Self-Review Using Recordings

Your smartphone or webcam is the simplest, most powerful data collection tool you own. Seriously. If you’re committed to getting better, recording your mock interview sessions is non-negotiable.

Watching yourself back gives you an unfiltered, often humbling, look at your performance. But don't just watch passively. Use that scoring rubric you built and grade yourself objectively. Score your clarity, empathy, and structure for every single response. This is how you turn a vague feeling like, "I felt nervous," into an objective insight: "My clarity score dropped to a 2/5 when I hit the ethical scenarios." Now that's something you can work with.

Structured Peer Feedback

Asking a friend or mentor for their thoughts is a classic move, but unstructured feedback often results in unhelpful comments like, "You did a good job!" To get something you can actually use, you have to guide the process.

Give your practice partner the exact same rubric you use for self-review and ask them to focus on specific metrics. Try prompts like these:

- "Could you just track my filler word count for the first two questions?"

- "On a scale of 1-5, how well did I validate the patient's perspective in that role-play?"

- "Was my answer to the 'Why our school?' question structured and logical, or did I ramble?"

This approach gets you specific, data-driven critiques instead of generic encouragement, making their time (and yours) far more valuable. To make the session even more realistic, have them pull questions from a high-quality medical school interview question bank.

AI-Powered Analysis

AI tools add a third, powerful layer of data collection. Their feedback is instant, consistent, and granular in ways that are hard to replicate by hand. These platforms can transcribe your entire mock interview and generate analytics that would be tedious to capture on your own.

Confetto’s dashboard is one example. It gives you an immediate breakdown of your speaking pace, filler words, and even your tone. The platform also provides a full transcript with timestamps, a clarity score, and specific feedback on body language, giving you a complete performance overview in seconds.

By combining these three methods (objective self-review, structured peer feedback, and detailed AI analysis), you create a robust feedback loop. This comprehensive dataset makes it far less likely that a weakness goes unnoticed, paving the way for a truly targeted improvement plan.

Turning Your Data into an Improvement Plan

Collecting data on your communication skills is a huge step forward, but the metrics themselves don't create improvement. The real growth happens when you translate those numbers into a concrete, actionable plan. This is where you shift from identifying what went wrong—like a high filler word count—to understanding why it happened and how to fix it.

Analysis is really about connecting the dots. It’s the process of looking at your transcript, your rubric scores, and your peer feedback to find the patterns that reveal your weaknesses. You're not just looking at a number; you're investigating the story behind it.

Your analysis draws on the three data streams you collected: your own reflections, a trusted peer’s perspective, and objective AI analysis. Layering them is what gives you a reliable dataset to work from.

From Data Points to Actionable Insights

Let's walk through a concrete example. Imagine you just finished a mock interview where you tackled a complex ethical dilemma. You review your AI-generated transcript and notice your filler word rate spiked from a respectable 3 per minute to a jarring 12 per minute the moment that prompt began.

This isn't just a random fluctuation. Your self-review confirms you felt a surge of panic when you heard the question. The root cause wasn't a general lack of confidence but a specific hesitation when faced with ambiguity. You simply lacked a pre-planned framework for ethical questions.

The goal of analysis is to move beyond surface-level observations ("I used too many filler words") to a root cause diagnosis ("I lack a structured approach for ethical prompts, causing hesitation and filler words").

This deeper understanding is the key. Now, instead of a vague goal like "use fewer filler words," you can create a targeted, actionable goal: "For the next mock interview, I will apply the 'Identify-Analyze-Act' framework to every ethical question to structure my initial thoughts." See the difference? That’s how data drives deliberate practice.
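Spotting a spike like the jump from 3 to 12 fillers per minute is easier when you compare each question against your typical rate. A minimal sketch, assuming you already have a filler-words-per-minute figure for each question (from an AI transcript or a manual count); using the median as the baseline and a 2x threshold are illustrative choices, not an established standard, and `find_spikes` is a hypothetical helper.

```python
from statistics import median


def find_spikes(rates: dict[str, float], factor: float = 2.0) -> list[str]:
    """Return the questions whose filler rate exceeds `factor` times the median rate.

    The median is used as the baseline so that a single bad answer does not
    drag the baseline up the way a mean would.
    """
    baseline = median(rates.values())
    return [question for question, rate in rates.items() if rate > factor * baseline]


# Filler words per minute for each question in one mock interview (example values).
session = {
    "Why medicine?": 3.0,
    "Ethical dilemma": 12.0,
    "Teamwork example": 3.5,
}
print(find_spikes(session))
```

The flag only tells you where to look. The root cause still comes from re-watching that answer and reading your self-review notes.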

Creating an Interview Improvement Log

An Interview Improvement Log is a simple but powerful tool for documenting this process. It turns your analysis into a written record, ensuring you build on your progress instead of repeating the same mistakes. Think of it as your personal communication skills lab notebook.

Your log doesn't need to be complicated. A simple table with four columns can create a powerful feedback loop for continuous improvement.

Example Mock Interview Analysis

Here's a sample analysis showing how to translate raw data from a mock interview into concrete, actionable steps for improvement.

| Metric/Data Point | Observation | Root Cause Analysis | Actionable Goal for Next Mock |
|---|---|---|---|
| Empathy Score: 2/5 | I used the phrase "That's a tough situation" but didn't validate the patient's feelings. | I rushed to solve the problem without first acknowledging the emotional state of the patient in the scenario. | Verbally acknowledge the patient's feelings (e.g., "It sounds incredibly frustrating...") before outlining any solutions. |
| Clarity Score: 3/5 | My answer for "Tell me about a time you failed" lacked a clear structure and conclusion. | I didn't use the STAR method and focused too much on the situation, not the result or what I learned. | Structure all behavioral answers using the STAR (Situation, Task, Action, Result) method, dedicating at least 30% of my time to the "Result." |
| Pacing: 185 WPM | My speaking pace was consistently above the ideal 140-160 WPM range, especially during the first minute. | Initial nervousness caused me to speak too quickly, making my introduction sound rushed and less thoughtful. | Take a deliberate pause and one deep breath before beginning my response to every single question. |

This systematic process of analyzing data and setting specific goals is fundamental to mastering how to measure communication effectiveness. It transforms your practice from random repetitions into a targeted, intelligent, and highly effective improvement cycle.
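If you keep the improvement log digitally, a CSV file with the same four columns as the example analysis opens in any spreadsheet and can be sorted by metric across many sessions. A minimal sketch, assuming a hypothetical `LogEntry` record whose fields mirror those columns; it uses only Python's standard `csv`, `io`, and `dataclasses` modules.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class LogEntry:
    """One row of the improvement log; fields mirror the four table columns."""
    metric: str
    observation: str
    root_cause: str
    next_goal: str


def to_csv(entries: list[LogEntry]) -> str:
    """Serialize log entries to CSV text, header row first."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(LogEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))  # fields with commas or quotes are escaped
    return buffer.getvalue()


log = [
    LogEntry(
        metric="Pacing: 185 WPM",
        observation="Above the 140-160 WPM range, especially in the first minute",
        root_cause="Initial nervousness",
        next_goal="Pause and take one deep breath before every answer",
    )
]
print(to_csv(log))
```

Writing the returned text to a file after each mock interview (or appending rows) gives you a running record you can filter by metric to see whether the same root cause keeps reappearing.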

Practical Examples and Templates to Get You Started

Theory is one thing, but putting it into practice is where the real learning begins. Abstract metrics only become powerful when you can see them in action. This section gives you the concrete examples and templates to move from just planning to actually doing.

Let's walk through a Multiple Mini-Interview (MMI) station to see how these metrics translate directly into scores. We'll look at two very different responses to the same ethical prompt, showing how specific word choices and the structure of an answer can create a massive gap in perceived competence.

MMI Scenario and Rubric in Action

Imagine you get this MMI prompt: “A patient on your service with a terminal diagnosis asks you to help them end their life. Physician-assisted suicide is illegal in your jurisdiction. How do you respond?”

Here’s how two candidates might handle this, scored on a simple 1-5 rubric for a few key communication skills.

Low-Scoring Response (Snippet):

“Well, that’s illegal, so I’d have to say no. I would tell them I can’t do that. I could refer them to a palliative care specialist to manage their pain, but I can’t break the law.”

This answer is technically correct but fails on almost every communication front. It’s blunt, lacks empathy, and jumps to a solution without first acknowledging the patient's profound distress.

High-Scoring Response (Snippet):

“This must be an incredibly difficult position to be in, and I appreciate you trusting me enough to share this. My primary commitment is to your comfort and dignity. While the law prevents me from assisting in that way, my focus is entirely on alleviating your suffering. Can we talk more about what’s driving this request? I want to understand your fears and explore every possible option to manage your pain and ensure you have control over your quality of life.”

The difference is night and day. The second response immediately validates the patient's feelings, shows genuine empathy, and frames the situation as a partnership—all while upholding clear ethical and legal boundaries.

Comparative Scoring Breakdown

Let's score these two snippets with our rubric to really quantify the difference.

| Metric | Low-Scoring Answer | High-Scoring Answer |
|---|---|---|
| Empathy (1-5) | 1 | 5 |
| Clarity (1-5) | 4 | 5 |
| Professionalism (1-5) | 3 | 5 |
| Critical Thinking (1-5) | 2 | 4 |

This tangible scoring shows exactly where the first candidate went wrong. The issue wasn't a lack of knowledge about the law; it was the complete failure to communicate effectively around that knowledge.

The most effective communicators don't just provide an answer; they manage the emotional and ethical context of the conversation. This skill is measurable and directly reflects professional maturity.

A Template for Peer Review

Structured feedback is the fastest way to improve. Instead of just asking a friend if your answer "was good," hand them a simple form. This guides them to give you the specific, constructive criticism you actually need. For a deeper dive into assessing non-verbal signals, our guide on interview body language tips has some great strategies.
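One way to produce such a form is to generate it from the same rubric you use for self-review, so you and your partner score identical dimensions. A minimal sketch; the rubric items and their prompts are illustrative assumptions drawn from the metrics discussed in this guide, and `peer_review_form` is a hypothetical helper.

```python
# Illustrative rubric items; swap in whichever metrics you are tracking.
RUBRIC = [
    ("Clarity", "Was the answer logically structured and easy to follow?"),
    ("Empathy", "Did I acknowledge and validate the patient's perspective?"),
    ("Professionalism", "Did I stay within clear ethical and legal boundaries?"),
]


def peer_review_form(question: str, rubric=RUBRIC) -> str:
    """Build a blank, printable peer-review form for one interview question."""
    lines = [f"Question: {question}", ""]
    for name, prompt in rubric:
        lines.append(f"{name} (1-5): ____")
        lines.append(f"  {prompt}")
    lines.append("Filler words counted: ____")
    lines.append("One specific thing to change next time: ____")
    return "\n".join(lines)


print(peer_review_form("Tell me about a time you failed."))
```

Ending the form with a single "one thing to change" line nudges your partner toward one concrete, actionable critique rather than general encouragement.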

When you're building feedback forms or self-review checklists, precise language is everything. You can find good examples of specific language for evaluating communication in these actionable communication performance review phrases. Resources like this give you the vocabulary to pinpoint strengths and weaknesses. With templates and scored examples in hand, every practice session becomes a source of valuable, actionable data.

Questions We Hear All the Time About Interview Communication

When you start digging into the details of interview prep, a few practical questions always pop up about how to actually measure something as fluid as communication. Here are some straight answers to the queries we see most often from applicants.

How Can I Measure Something as Subjective as Empathy?

It’s true, empathy feels subjective. But you can track it with concrete, observable behaviors. The trick is to stop thinking about "feeling empathetic" and start focusing on specific actions you can count.

Did you verbally acknowledge what the other person said? Did you use validating language, like, “That sounds like it was an incredibly tough situation”?

Try building a simple checklist for your next mock interview:

- Verbal Acknowledgment: Yes/No

- Validation of Feelings: Yes/No

- Patient-Centered Language Used: Yes/No

Tallying these up transforms a soft skill into a hard data point. It gives you a clear, objective look at exactly where you need to focus your efforts.
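Across several answers, the checklist turns into a rate: the share of answers in which each behavior appeared. A minimal sketch, assuming you record one Yes/No (True/False) per checklist item for each answer; the key names are hypothetical labels for the three items above.

```python
# Hypothetical keys for the three checklist items.
BEHAVIORS = (
    "verbal_acknowledgment",
    "validation_of_feelings",
    "patient_centered_language",
)


def tally(answers: list[dict]) -> dict:
    """Return the fraction of answers in which each behavior was observed."""
    if not answers:
        raise ValueError("need at least one scored answer")
    # True counts as 1 and False as 0 when summed.
    return {b: sum(answer[b] for answer in answers) / len(answers) for b in BEHAVIORS}


scored = [
    {"verbal_acknowledgment": True, "validation_of_feelings": False, "patient_centered_language": True},
    {"verbal_acknowledgment": True, "validation_of_feelings": False, "patient_centered_language": True},
    {"verbal_acknowledgment": True, "validation_of_feelings": True, "patient_centered_language": True},
    {"verbal_acknowledgment": False, "validation_of_feelings": False, "patient_centered_language": True},
]
print(tally(scored))
```

A low rate on a single item, such as validation of feelings, points you to the specific phrasing to rehearse before the next mock interview.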

Is Speaking Pace Really That Big of a Deal?

Absolutely. Your speaking pace is a powerful non-verbal cue that signals either confidence or anxiety. A range of roughly 140-160 words per minute (WPM) is commonly cited as the zone where speakers sound clear and credible.

Go too fast, and you risk sounding nervous and being hard to follow. Go too slow, and your listener's mind might start to wander. The easiest way to nail this is to use an AI tool to get your exact WPM. It provides an objective number you can aim for in your practice sessions, making sure your delivery is both clear and controlled.

What if I Don’t Have a Mentor for Peer Review?

While a mentor is ideal, you don't need one to get great feedback. A trusted classmate, a colleague, or even a family member can give you incredibly valuable insights if you give them the right instructions.

The key is to give them a specific, manageable task. Don't just ask, "So, how did I do?" That's too broad.

Instead, be direct: "Could you just listen to this one answer and count how many times I say 'um' or 'like'?" This approach takes the pressure off them to be an "expert" and gets you the specific, actionable data you need to make real improvements.

Measuring communication effectiveness isn't just an interview hack; it's a core competency in modern healthcare. Metrics like the Patient Satisfaction Score (PSS) are now standard for gauging clinical success, directly linking clear communication to patient loyalty and better health outcomes. You can learn more about the metrics shaping patient communication in healthcare on healthtechdigital.com.

Ready to stop guessing and start improving with data-driven feedback? Confetto provides instant, AI-powered analysis on your empathy, clarity, pacing, and more, helping you turn practice into confidence. Try three mock interviews for free and see your performance metrics today at https://confetto.ai.